Goodbye MapReduce, Hello Spark

For those of you not familiar with Spark, it is a cluster computing framework developed at UC Berkeley’s AMPLab. Unlike MapReduce, which writes its data to disk between steps, Spark attempts to perform all of its computations in memory, which can yield significant performance improvements. Spark is generally considered ideal for data that fits in a cluster’s memory, but it has also outperformed MapReduce on data that lives on disk.

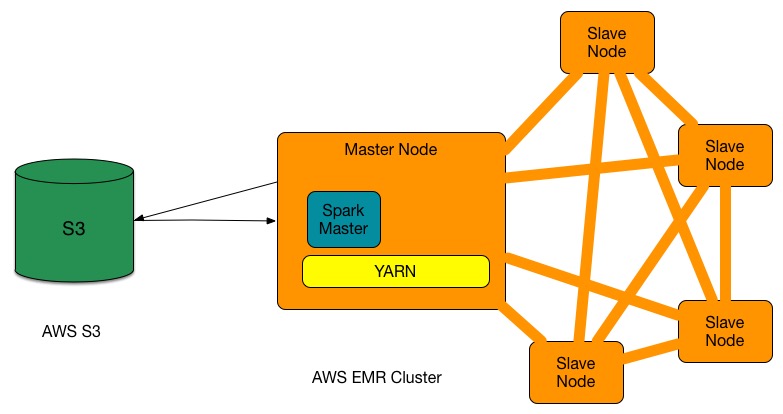

This post isn’t meant to be a how-to, but rather to explain our motivation for migrating the entire pipeline (batch and streaming) to Spark. The reality also isn’t quite as dramatic or as abrupt as the title suggests. We’ve been using Spark in one form or another since mid-2013. We started by using it to write streaming jobs that inform our predictive models and populate real-time dashboards. About a year ago, we brought in Databricks Cloud, a fully managed Spark cluster, for ad-hoc data analysis, reports, and investigations. All that was left to complete the switch to Spark was to migrate our batch processing pipeline. This turned out to be relatively low effort, since we were already using Scalding’s Type-safe API to write our MapReduce jobs. Our biggest challenges actually came when trying to run Spark on YARN/EMR, but more on that in a later blog post.

Now that we’ve been running our entire data pipeline on Spark for the last several months, what have we gained?

- A single framework on which we write all of our jobs.

- Code re-use between different jobs and different types of jobs (Streaming vs. Batch).

- A 2x performance improvement in our batch jobs.

- Spark and Databricks have enabled us to open up the platform to data scientists and product managers.

- The REPL makes the feedback cycle much quicker when developing on Spark.

- On the back-end, we’re primarily a Scala team, and Spark code fits in better with the Scala paradigm.

In addition to this recent migration of batch jobs to Spark, we were also able to simplify our infrastructure a bit. Before, we had streaming clusters running on Mesos, Scalding jobs on a CDH cluster, and a stand-alone Databricks cluster on AWS instances. We kept the Databricks cluster, but moved off CDH and Mesos and settled on AWS EMR clusters, which lets us standardize our ops.

We’re sponsoring Spark Summit, so stop by our booth and say hi.